We solved GitHub's spam problem for them

GitHub has been having problems with spam recently.

We received three spam emails ourselves, and we weren't the only ones. The problem was raised in a blog post by Dan Janes over a month ago, then in a Theo video and an HN post.

It also hasn’t slowed down since then.

So we thought, why not try doing something about it ourselves? We used GitHub's events API, a real-time stream of public events, and Trench, an open-source abuse monitoring tool we built, to detect 90% of spam in real time.
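To make the data source concrete, here is a minimal Python sketch of pulling issue comments out of GitHub's public events endpoint. It is not Trench's actual ingestion code. The function names and the flattened output fields are our own illustration, and the sketch assumes a single unauthenticated request (which is heavily rate-limited) rather than a continuous poller.

```python
import json
import urllib.request

EVENTS_URL = "https://api.github.com/events"  # GitHub's public events endpoint


def fetch_public_events(url: str = EVENTS_URL) -> list[dict]:
    """Fetch one page of recent public events (unauthenticated, rate-limited)."""
    request = urllib.request.Request(
        url,
        headers={
            "Accept": "application/vnd.github+json",
            "User-Agent": "spam-detection-sketch",  # hypothetical client name
        },
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def extract_comments(events: list[dict]) -> list[dict]:
    """Keep only issue-comment events and flatten the fields we care about."""
    comments = []
    for event in events:
        if event.get("type") != "IssueCommentEvent":
            continue
        comment = event.get("payload", {}).get("comment", {})
        comments.append(
            {
                "user": event.get("actor", {}).get("login"),
                "repo": event.get("repo", {}).get("name"),
                "body": comment.get("body") or "",
                "created_at": event.get("created_at"),
            }
        )
    return comments
```

Calling `extract_comments(fetch_public_events())` in a loop would give a rough feed of new comments to run signals on.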

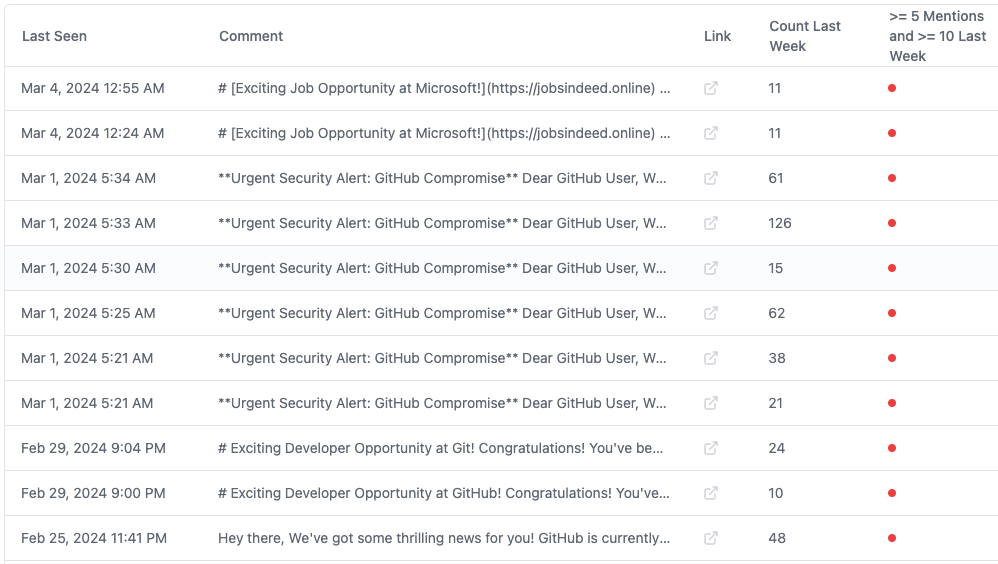

The result: now as soon as a spam comment is posted, we can instantly see it here. And if we were GitHub, we'd be able to block it too. Here are some examples of what we caught in the last week:

Here is how we did it.

But first, how does spam work?

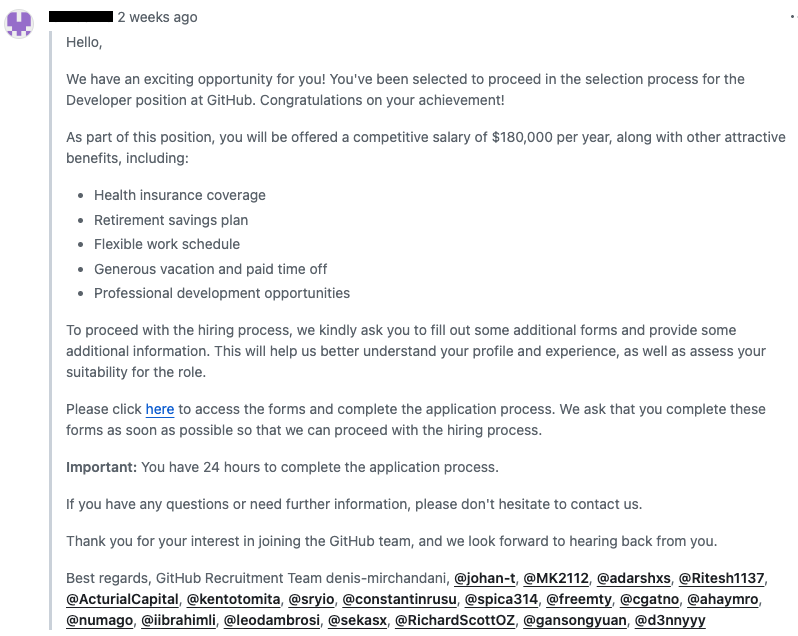

Let's look at an example:

The spammers posted a comment on a GitHub issue, triggering an email notification from GitHub to the mentioned users that looks like an urgent job invitation. When the victim clicks the phishing link, they're prompted to "login with GitHub" and to authorize an OAuth app with these permissions:

A third-party OAuth application (Jobs Indeed) with delete_repo, gist, read:org, repo, user, and write:discussion scopes was recently authorized to access your account.

If they hit authorize, their account is hijacked to post even more spam comments, and the cycle repeats.

So… how can we flag spam like this?

Step 1: Track GitHub users and duplicate comments

Trench lets you track any entity you care about and gives you dashboards to monitor them. In this case, we tracked GitHub users, duplicate comments, repos, and orgs.

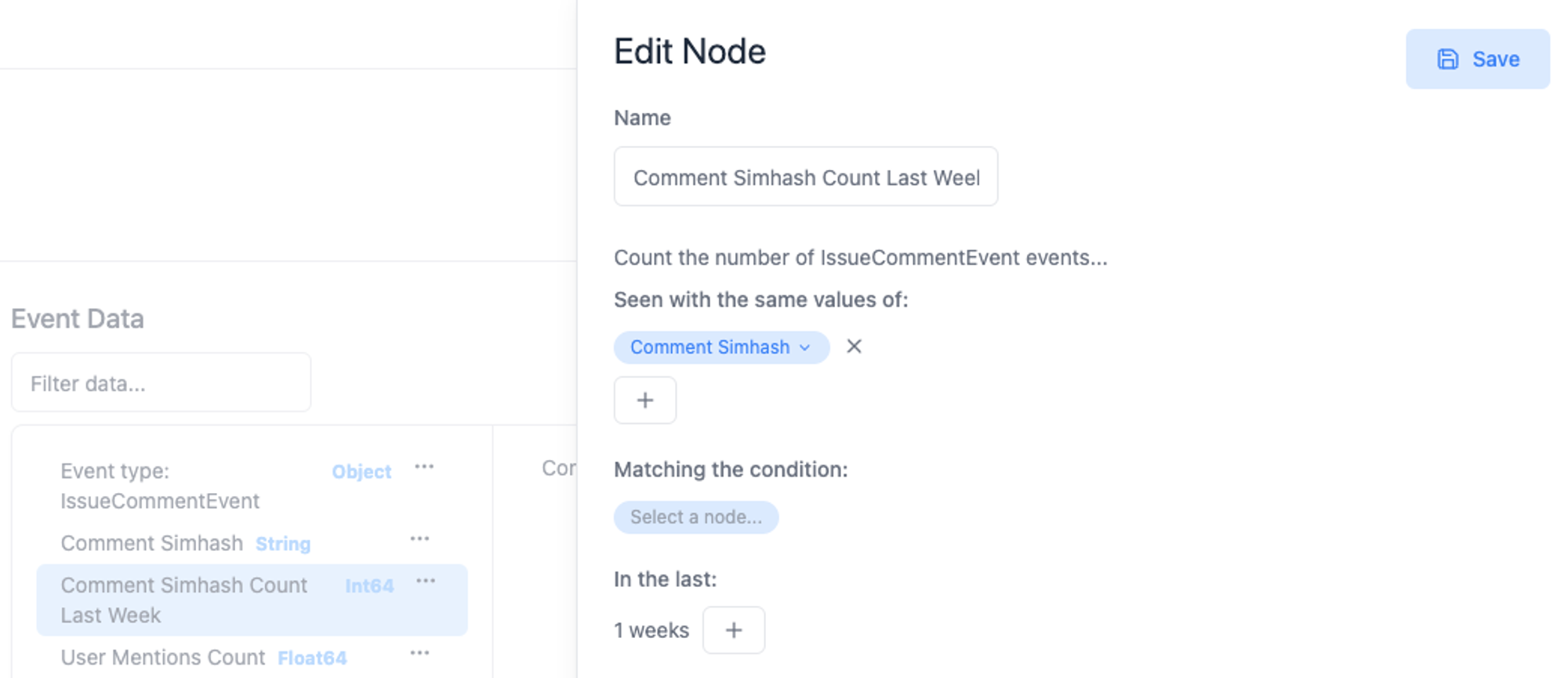

How do we track duplicate comments? We wrote a function that normalizes each comment by removing all user mentions, then stored its simhash, a hash of the content designed so that near-duplicate text produces the same (or a nearly identical) hash. This way, comments that are almost identical usually end up with the same hash and get grouped together.
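As an illustration, here is a small Python sketch of that normalize-then-hash step. It is a textbook 64-bit simhash over word tokens, not the exact function we run in Trench. The mention regex, the lowercasing, and the use of MD5 as the per-token hash are all assumptions made for the example.

```python
import hashlib
import re

# Assumed mention syntax: @user or @org/team, not preceded by a word character
# (so email addresses are left alone).
MENTION_RE = re.compile(r"(?<!\w)@[A-Za-z0-9][A-Za-z0-9-]*(?:/[A-Za-z0-9_-]+)?")


def normalize_comment(body: str) -> str:
    """Strip user mentions, lowercase, and collapse whitespace."""
    without_mentions = MENTION_RE.sub(" ", body)
    return " ".join(without_mentions.lower().split())


def simhash(text: str, bits: int = 64) -> int:
    """Classic simhash: every token votes +1/-1 on each bit of the fingerprint."""
    weights = [0] * bits
    for token in re.findall(r"\w+", text):
        digest = hashlib.md5(token.encode("utf-8")).digest()
        token_hash = int.from_bytes(digest[: bits // 8], "big")
        for i in range(bits):
            weights[i] += 1 if (token_hash >> i) & 1 else -1
    fingerprint = 0
    for i, weight in enumerate(weights):
        if weight > 0:
            fingerprint |= 1 << i
    return fingerprint


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


first = normalize_comment("@alice @bob Congratulations, you were selected! Visit example.test")
second = normalize_comment("@carol Congratulations, you were selected! Visit example.test")
assert simhash(first) == simhash(second)  # identical once mentions are removed
```

Comments that differ by more than their mentions can land on different fingerprints, in which case `hamming_distance` gives a rough measure of how close they are.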

Now in the dashboard, for any spam comment, you can see:

- all of its past activity

- all the entities (e.g. users, repos) linked to it

- similar comments that are related by those entities

- any useful signals you collected (see step 2)

Here's an example spam comment in the dashboard.

Step 2: Calculate signals

In his post, Dan Janes suggested a few ideas for identifying spam, so we started with those:

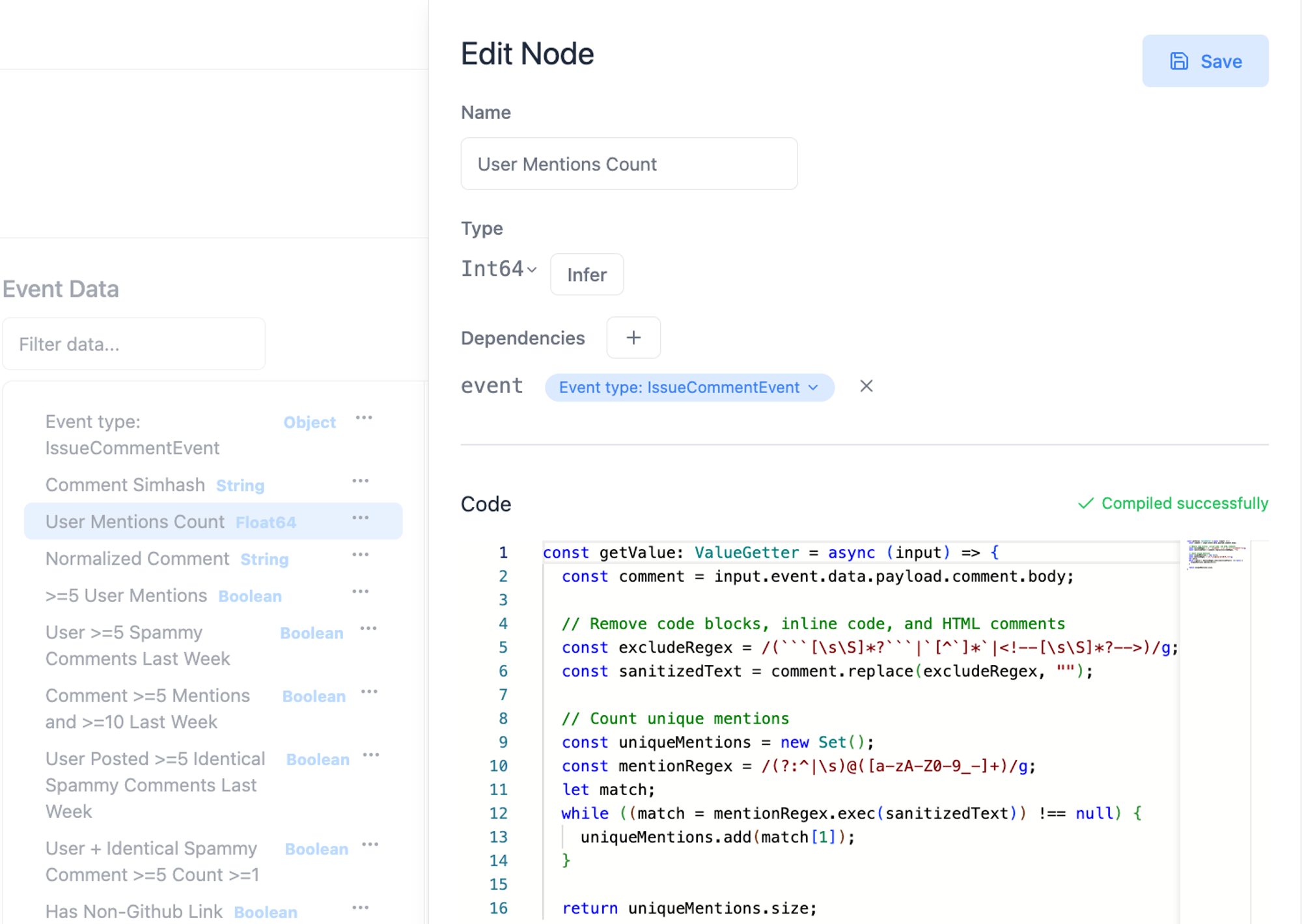

1. Flag comments that mention a lot of users.

We wrote a function in Trench to count the number of mentions for each comment.

2. Flag similar comments that are posted repeatedly across the site.

Using Trench, we count how many times a comment simhash has shown up in the past week.

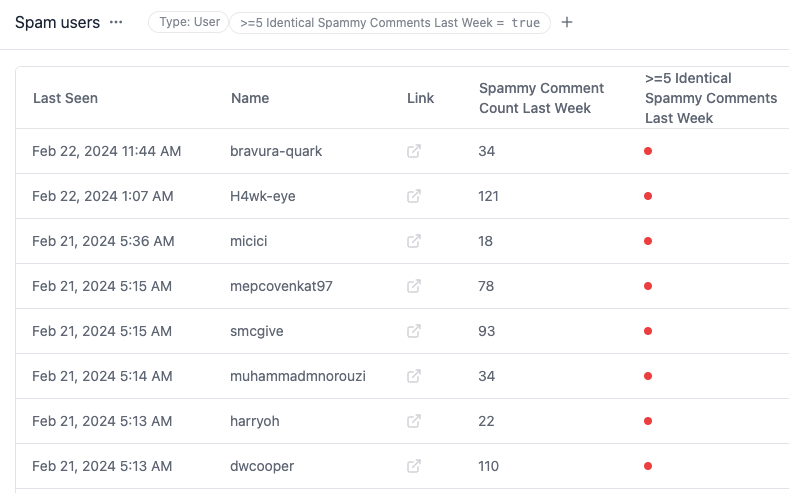

3. Flag new users with empty profiles (no repos, no profile picture, no bio).

We added “Empty Profile” and “Mostly Empty Profile” labels to users who are missing profile fields such as a bio, repos, achievements, or organizations. We also added a “New account” label to users whose accounts were created less than 60 days ago.
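The three signals above can be sketched in a few lines of Python. This is an illustration rather than Trench's API: the `Profile` fields, the `WeeklyCounter` class, and especially the cutoff between "Empty" and "Mostly Empty" are assumptions we made up for the example. Only the 60-day threshold and the one-week window come from the description above.

```python
import re
from collections import defaultdict, deque
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

MENTION_RE = re.compile(r"(?<!\w)@[A-Za-z0-9][A-Za-z0-9-]*(?:/[A-Za-z0-9_-]+)?")


def count_mentions(body: str) -> int:
    """Signal 1: how many @mentions a comment contains."""
    return len(MENTION_RE.findall(body))


class WeeklyCounter:
    """Signal 2: occurrences of a comment hash in a trailing window.

    Assumes events are recorded in chronological order.
    """

    def __init__(self, window: timedelta = timedelta(days=7)) -> None:
        self.window = window
        self._seen: dict[int, deque] = defaultdict(deque)

    def record(self, comment_hash: int, at: datetime) -> int:
        """Record one occurrence and return the count inside the window."""
        timestamps = self._seen[comment_hash]
        timestamps.append(at)
        while timestamps and timestamps[0] <= at - self.window:
            timestamps.popleft()
        return len(timestamps)


@dataclass
class Profile:
    """Hypothetical subset of profile fields used for labeling."""

    created_at: datetime
    bio: Optional[str] = None
    public_repos: int = 0
    organizations: int = 0
    has_custom_avatar: bool = False


def profile_labels(profile: Profile, now: datetime) -> list[str]:
    """Signal 3: label empty-looking and newly created accounts."""
    filled = sum(
        [
            bool(profile.bio),
            profile.public_repos > 0,
            profile.organizations > 0,
            profile.has_custom_avatar,
        ]
    )
    labels = []
    if filled == 0:
        labels.append("Empty Profile")
    elif filled == 1:  # assumed cutoff for "mostly empty"
        labels.append("Mostly Empty Profile")
    if now - profile.created_at < timedelta(days=60):
        labels.append("New account")
    return labels
```

Keeping each signal as a small pure function (or a counter with one method) makes it easy to recompute them as each new event arrives.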

Once we set up these signals, we waited for new GitHub events to populate in the dashboard. Here's the final data model.

Step 3: Determine rules

The next step was to look at the data in the dashboard and to come up with rules.

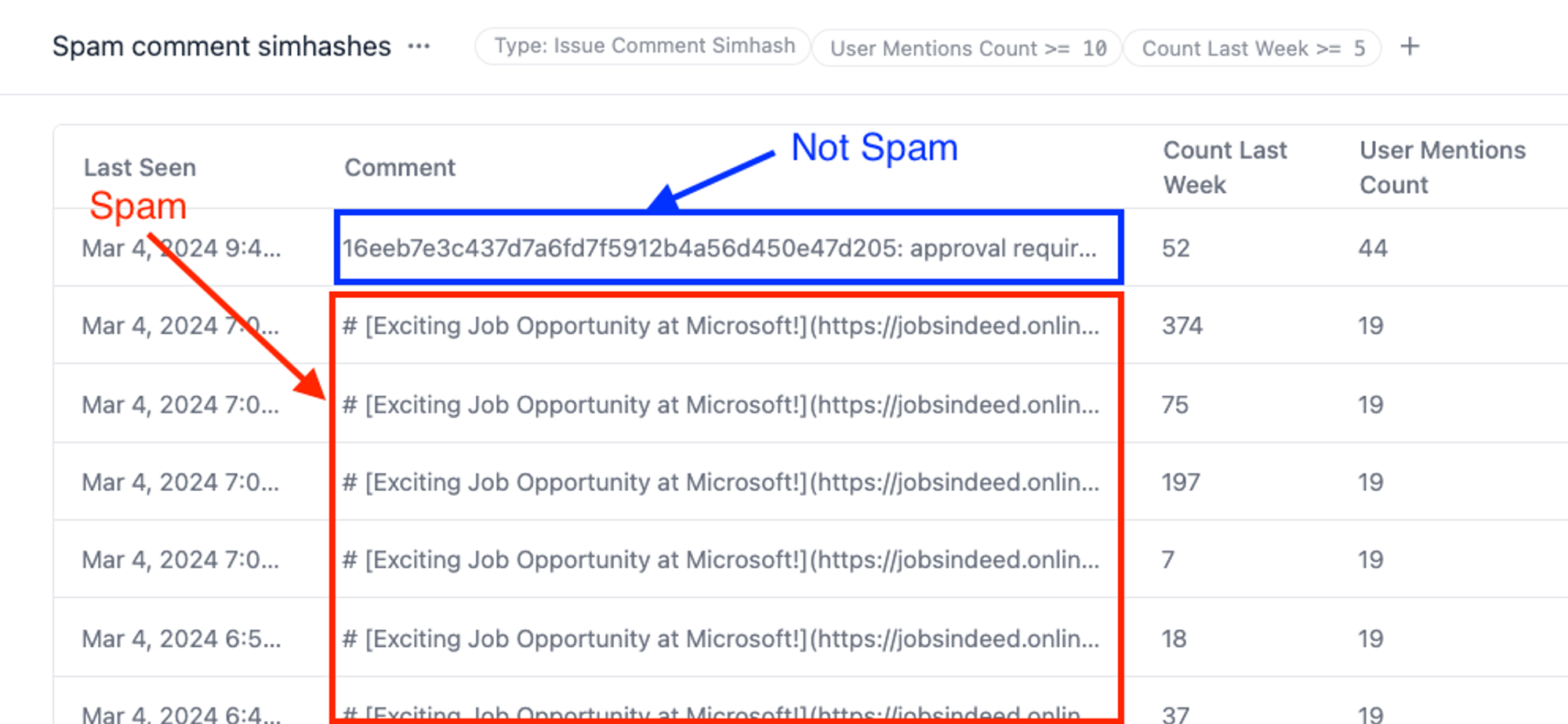

We found that filtering by ≥ 5 user mentions and ≥ 10 comment occurrences in the last week yielded a large list of spam comments, totaling thousands of comments on GitHub over 2 weeks.

Right away, we noticed a few false positives – there are legitimate GitHub bots that send templated messages and tag a lot of people. For example, a bot might remind users to review a PR. Many of these bots are machine users, which means they are registered by a human being for automation purposes and are not trivially distinguishable from normal users. We needed a way to separate these out.

Through manual inspection, we noticed these legit messages would generally not link to external domains, whereas all of the spam messages did (the phishing link). So we added an additional criterion for marking spam: has non-GitHub link. We found this to be very effective at separating spam from normal bot activity.
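Put together, the rule looks roughly like the Python sketch below. The thresholds (≥ 5 mentions, ≥ 10 occurrences in the last week, at least one non-GitHub link) are the ones described above. The URL regex and the list of domains we count as "GitHub" are assumptions for illustration, not the exact check we run.

```python
import re
from urllib.parse import urlparse

# Rough URL matcher; stops at whitespace, quotes, and brackets.
URL_RE = re.compile(r"https?://[^\s()<>\[\]\"']+")

# Assumption: which hosts count as "GitHub" for this rule.
GITHUB_DOMAINS = ("github.com", "githubusercontent.com")


def has_non_github_link(body: str) -> bool:
    """True if the comment links to any host outside the GitHub domains."""
    for url in URL_RE.findall(body):
        host = (urlparse(url).hostname or "").lower()
        if not host:
            continue
        on_github = any(
            host == domain or host.endswith("." + domain)
            for domain in GITHUB_DOMAINS
        )
        if not on_github:
            return True
    return False


def is_spam(mentions: int, occurrences_last_week: int, body: str) -> bool:
    """Combine the three criteria from this step into one rule."""
    return (
        mentions >= 5
        and occurrences_last_week >= 10
        and has_non_github_link(body)
    )
```

A templated bot reminder that only links to a pull request on github.com fails the last check, so it is not flagged even if it tags many people and repeats often.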

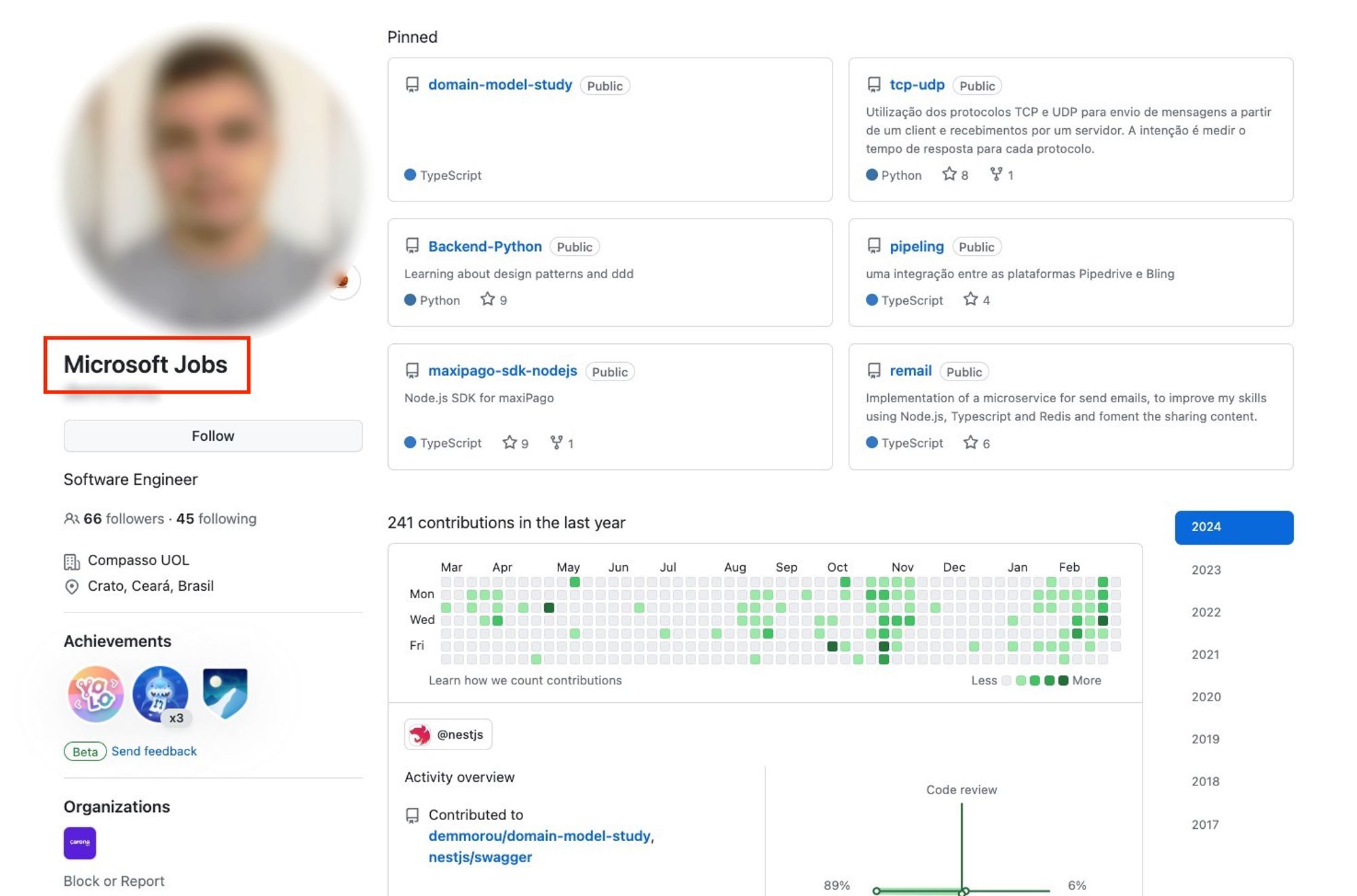

As a side note, we found that looking at user profiles wasn’t as useful. The majority of posters were actually victims of the phishing scam, so the accounts looked totally legitimate. Below is an example of a victim (whose name was modified by the attackers to “Microsoft Jobs”):

Results

See the live results here.

After running these rules for a few days, we saw thousands of spam comments similar to the earlier one. (Add up the numbers in the “Count Last Week” column to get the total number of spam comments.)

We noticed that using the simhash to find near-duplicate posts wasn’t perfect. Sometimes spam comments were slightly different, so there would be multiple hashes for the same type of comment. Apart from that, our rules were effective. There were almost no false positives, and if we search for posts that include the spam links, we can see that we flagged over 90% of the spam posts.

Interestingly, we can now see the scale of the spam attack. In a few hours, over a thousand posts tagging 19 users each were made, spamming a total of over 20k users.

How would this work for GitHub?

Because Trench can calculate signals and rules in real time, we can use it to stop spam as soon as it starts.

For example, we can block email notifications from being sent for any comment whose simhash is flagged as spam. A more conservative approach would be simply to include cautionary text in email notifications for flagged spam to warn potential victims.

We can also flag users who have posted spam in the past. We can temporarily block or rate limit a user’s posts if, for example, they posted the same comment 5 times in the past week, and that comment mentions >5 people and has non-GitHub links.
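As a sketch of how those responses could be wired up, the Python below turns precomputed signals into a notification decision and a rate-limit decision. The `CommentSignals` fields and the returned action names are hypothetical. Only the example thresholds (same comment 5 times in a week, more than 5 mentions, a non-GitHub link) come from the text above.

```python
from dataclasses import dataclass


@dataclass
class CommentSignals:
    """Hypothetical bundle of signals already computed for one comment."""

    simhash_flagged: bool              # comment hash is on the spam list
    same_comment_count_last_week: int  # times this user posted this comment
    mentions: int
    has_non_github_link: bool


def notification_action(signals: CommentSignals, conservative: bool = False) -> str:
    """Decide what to do with the email notification for a comment."""
    if not signals.simhash_flagged:
        return "send"
    # Conservative mode keeps the email but adds cautionary text.
    return "send_with_warning" if conservative else "suppress"


def should_rate_limit_user(signals: CommentSignals) -> bool:
    """Example rule for temporarily blocking or rate limiting a poster."""
    return (
        signals.same_comment_count_last_week >= 5
        and signals.mentions > 5
        and signals.has_non_github_link
    )
```

Separating the two decisions means a platform could start with the low-risk warning text and only later turn on suppression or rate limiting.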


Bonus round: Fake stars

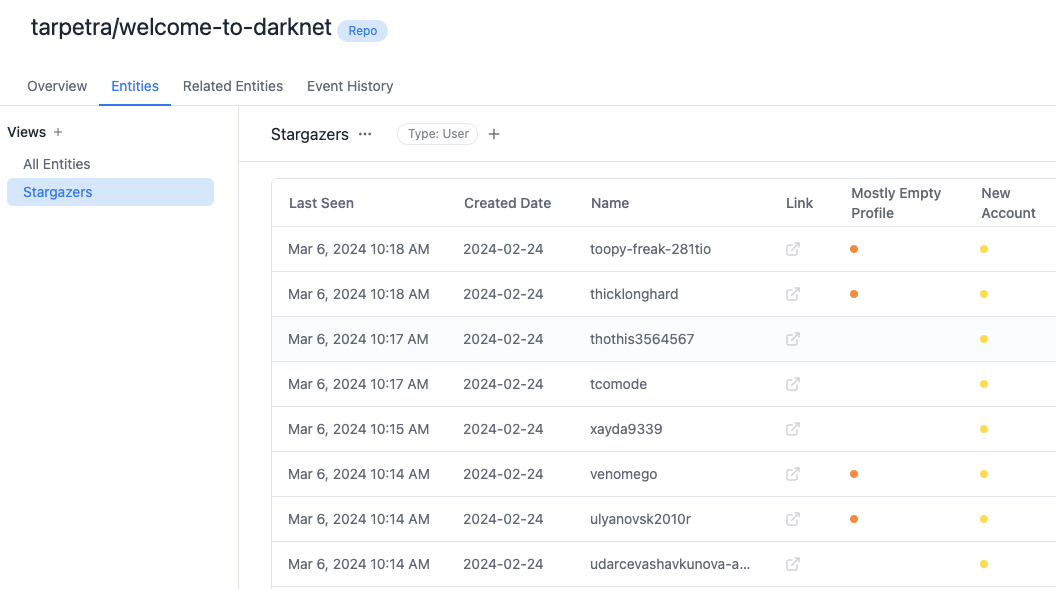

For additional fun, we're going to look for blatant instances of repos with fake stars. We'll do this by flagging repos with a high ratio (over 80%) of stars from fake-looking users. These are users who have mostly empty profiles or who created their account less than 60 days ago.

In Trench, for each new star on GitHub, we fetch the user’s profile information and flag the ones that look fake. Then we calculate the percentage of stars a repo has from “fake users”. You can see the live results here.
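Here is a minimal Python sketch of that ratio calculation. The `Stargazer` type and function names are hypothetical, and the "mostly empty profile" flag is assumed to be computed elsewhere. The 60-day cutoff and the 80% threshold are the ones described above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Stargazer:
    """Hypothetical record for a user who starred a repo."""

    login: str
    created_at: datetime
    mostly_empty_profile: bool


def looks_fake(user: Stargazer, now: datetime) -> bool:
    """Fake-looking: mostly empty profile or account younger than 60 days."""
    return user.mostly_empty_profile or now - user.created_at < timedelta(days=60)


def fake_star_ratio(stargazers: list[Stargazer], now: datetime) -> float:
    """Fraction of a repo's stars that come from fake-looking users."""
    if not stargazers:
        return 0.0
    fake = sum(looks_fake(user, now) for user in stargazers)
    return fake / len(stargazers)


def has_suspicious_stars(
    stargazers: list[Stargazer], now: datetime, threshold: float = 0.8
) -> bool:
    """Flag repos where more than `threshold` of stars look fake."""
    return fake_star_ratio(stargazers, now) > threshold
```

In practice a minimum star count would probably be needed as well, since a repo with two stars from new accounts trivially exceeds 80%.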

You'll notice there's a surprising number of these repos, and many of them host content that violates GitHub's Terms of Service, such as cracked software.

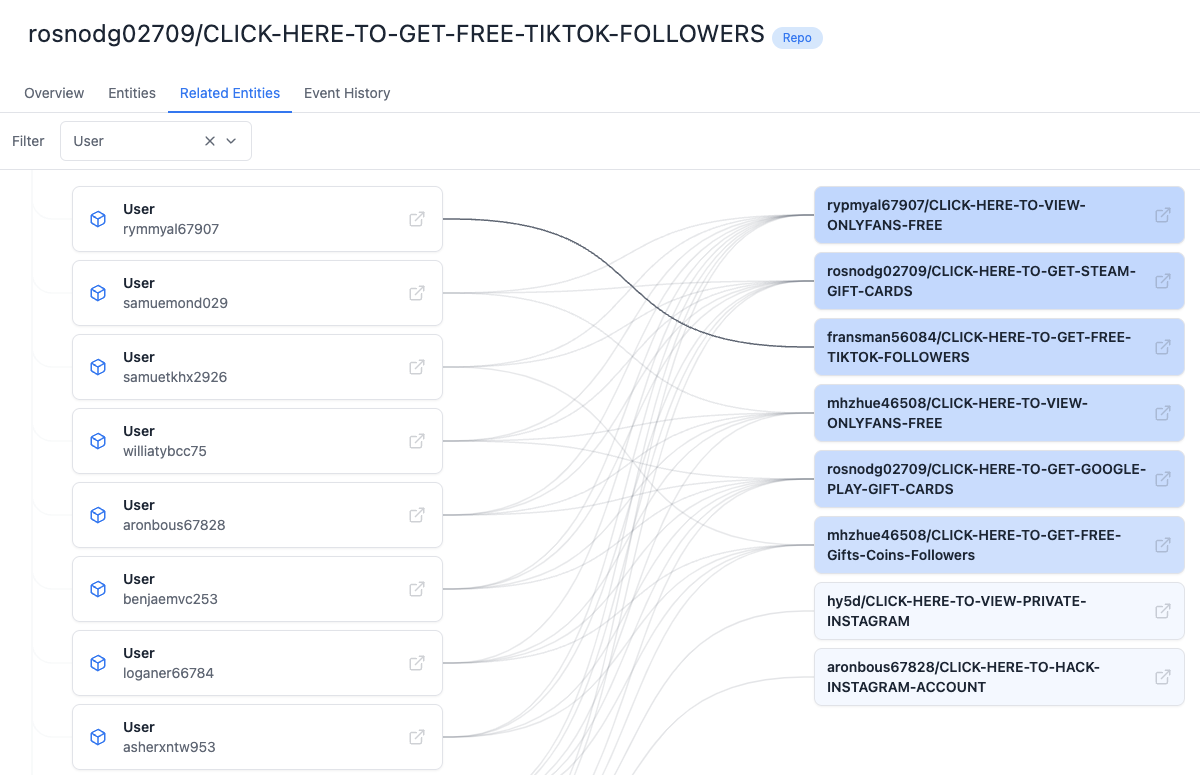

We can also see that a lot of the repos with fake stars share the same users, like this one:

Conclusion

Stopping spam and abuse is a difficult task, as attackers are constantly inventing new strategies. At the time of posting this article, many of the spam comments and fake-star repos we found have already been identified and deleted by GitHub. This deep-dive gave us a greater appreciation for the work being done behind the scenes by the GitHub Trust and Safety team, as well as by the community in diligently reporting spam.

We saw that fraudsters strike fast and hard. Attacks occur over just a few days, or even hours, and impact tens of thousands of users. Oftentimes, by the time the attack is addressed, much of the damage has already been done.

Our goal in building Trench is to improve response times for Trust and Safety teams from weeks to hours, and even minutes in some cases. We’re starting with an open-source observability tool that’s flexible enough to detect fraud and abuse in any application, and also performant enough to act in real time.